Big Data Platform: Improve Your Odds with Predictability

By Bhagvan Kommadi

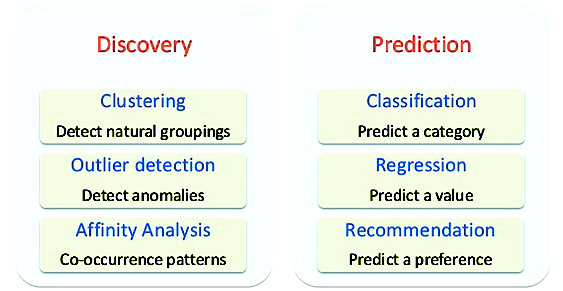

A big data platform supports both discovery and prediction. Discovery consists of clustering, outlier detection, and affinity analysis: clustering detects natural groupings, outlier detection detects anomalies, and affinity analysis identifies co-occurrence patterns. Prediction consists of classification, regression, and recommendation: classification predicts categories, regression predicts values, and recommendation predicts preferences.
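Two of the discovery tasks above, affinity analysis and outlier detection, can be illustrated in a few lines. The following is a minimal sketch using only the Python standard library, not a description of any particular platform. The function names, the sample baskets, the sensor readings, and the z-score threshold of 2.0 are all assumptions chosen for illustration.

```python
from collections import Counter
from itertools import combinations
from statistics import mean, stdev


def co_occurrence_counts(baskets):
    """Affinity analysis: count how often each unordered item pair appears together."""
    counts = Counter()
    for basket in baskets:
        # sorted(set(...)) gives one canonical key per pair and ignores duplicates.
        for pair in combinations(sorted(set(basket)), 2):
            counts[pair] += 1
    return counts


def z_score_outliers(values, threshold=2.0):
    """Outlier detection: values more than `threshold` sample standard deviations from the mean."""
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        return []  # all values identical, nothing stands out
    return [v for v in values if abs(v - mu) / sigma > threshold]


# Hypothetical sample data.
baskets = [
    ["bread", "milk"],
    ["bread", "butter", "milk"],
    ["butter", "jam"],
    ["bread", "milk", "jam"],
]
pair_counts = co_occurrence_counts(baskets)
top_pair, top_count = pair_counts.most_common(1)[0]
print(top_pair, top_count)  # ('bread', 'milk') 3

readings = [10, 11, 9, 10, 12, 10, 11, 50]
outliers = z_score_outliers(readings)
print(outliers)  # [50]
```

At real data volumes the same counting and scoring logic would typically run on a distributed framework rather than in a single process.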

In recent years, many companies have focused their big data initiatives on tactical business objectives, product information management, performance management, correction of business execution, innovation through new products, and predictive capabilities. Enterprises are strengthening their capabilities in several areas: guidelines for data gathering and assembly, guidelines for external data sharing, data security and privacy, alignment of new product releases with customer preferences, and IT expertise in big data analysis and performance data analysis. Performance and breakdowns are the bottlenecks of big data solutions. Enterprises have traditionally struggled with data size, scale, extent, speed, efficiency, complexity, and variety of formats.

In big data, complexity refers to how large volumes of data are stored and accessed. Speed refers to how fast data is captured, processed, analyzed, and delivered. In-memory computing supports real-time data processing and the delivery of intelligence for predicting patterns in big data. Big data frameworks have evolved to support large-scale data processing, parallelization of data tasks, data cleansing, and data storage. Reporting and analytics capabilities have been transformed into tools for decision making, forecasting, and prediction.

Predictive analytics platforms are next-generation technology platforms in enterprise IT. They provide targeted, relevant predictive analytics to business users, who can then anticipate and react to emerging trends. These platforms help reduce and manage risk, forecast specific behavior, and act preemptively on trends. The expected result is more up-selling and less churn.

A big data predictive platform targets multiple channels, social networks, and devices, and accesses information through events. Events occur in real time and are generated by various applications. Events have to be captured in a database or in memory to be processed, and the resulting information has to be sent across the application layer to different devices and channels (web, desktop, kiosk, and mobile). Every event has a context associated with it, typically the attributes what, why, who, where, and when. The solution patterns for event processing are sequential, aggregate, on-off, missing, window, correlation, and nested events. Predictive event modelling, time series modelling, and scatter plot smoothing are the predictive analytics features in the platform.
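As an illustration of two of the event-processing patterns named above, window and missing, here is a minimal in-memory sketch. The `Event` fields mirror the what, why, who, where, when context attributes. The class and function names, the 60-second window, and the 30-second gap are hypothetical, and the sketch assumes events arrive in timestamp order.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    what: str        # event type, e.g. "login"
    who: str         # user or device that produced the event
    where: str       # channel: web, desktop, kiosk, mobile
    when: float      # timestamp in seconds
    why: str = ""    # optional reason or trigger


class SlidingWindowCounter:
    """Window pattern: keep only events from the last `window_seconds`."""

    def __init__(self, window_seconds):
        self.window_seconds = window_seconds
        self._events = deque()

    def add(self, event):
        # Assumes events arrive in non-decreasing timestamp order.
        self._events.append(event)
        cutoff = event.when - self.window_seconds
        while self._events and self._events[0].when <= cutoff:
            self._events.popleft()

    def count(self, what):
        return sum(1 for e in self._events if e.what == what)


def find_missing(timestamps, max_gap):
    """Missing pattern: return (start, end) pairs where the gap between
    consecutive events is larger than `max_gap` seconds."""
    ordered = sorted(timestamps)
    return [(a, b) for a, b in zip(ordered, ordered[1:]) if b - a > max_gap]


# Hypothetical event stream.
stream = [
    Event("login", "user-1", "web", 0.0),
    Event("login", "user-2", "mobile", 10.0),
    Event("purchase", "user-1", "web", 20.0),
    Event("login", "user-3", "kiosk", 70.0),
]
window = SlidingWindowCounter(window_seconds=60)
for ev in stream:
    window.add(ev)

logins_in_window = window.count("login")
gaps = find_missing([ev.when for ev in stream], max_gap=30)
print(logins_in_window, gaps)  # 1 [(20.0, 70.0)]
```

A production system would usually delegate this to a stream-processing engine. The deque-based window is only meant to show the shape of the logic.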

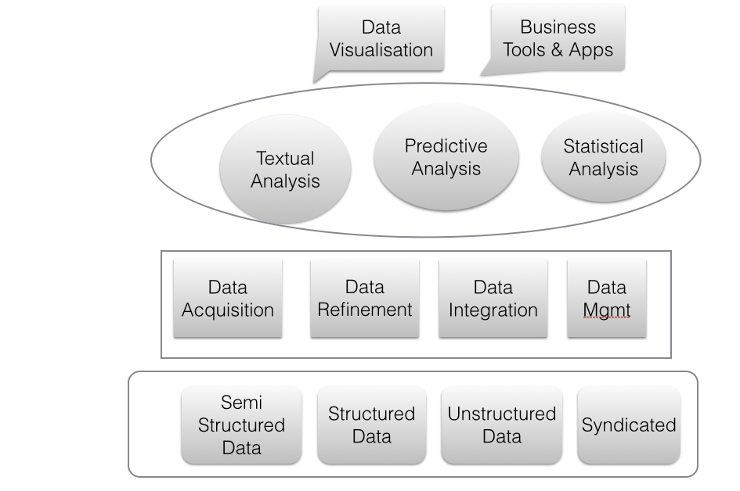

A big data platform has operational services, data services, and enterprise readiness services that provide high availability, disaster recovery, and security. Data visualization apps and business tools use textual analysis, predictive analysis, and statistical analysis services. Data acquisition, data refinement, data integration, and data management are part of the data services layer. Semi-structured data analysis, structured and unstructured data, and syndicated data are part of the data repository layer.

The best practices followed during big data analysis are evolving the legacy big data environment, maintaining separate sandbox and production environments, backup and archiving, using multiple caches to reduce latency, master data management, and data cleansing. Master data management (MDM) consolidates data into a single high-quality, on-demand source using a “one touch” master-data collection process. Enterprises need a pilot program in advanced analytics that acts as an incubator for developing big data capabilities in their business units and creates a path to additional growth.
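The idea of consolidating master data into a single source can be sketched as a merge of source records into one record per entity. This is only an illustrative assumption about how such a consolidation might work. The field names (`customer_id`, `email`, `phone`, `updated`) and the rule that the most recently updated non-empty value wins are hypothetical, not taken from any specific MDM product.

```python
def build_golden_records(records, key="customer_id"):
    """Merge records sharing the same key into one record per entity.

    Records are applied oldest first, so a newer non-empty value
    overwrites an older one, while empty values never erase data.
    """
    golden = {}
    for rec in sorted(records, key=lambda r: r["updated"]):
        merged = golden.setdefault(rec[key], {})
        for field, value in rec.items():
            if value not in (None, ""):
                merged[field] = value
    return golden


# Hypothetical records from two source systems.
source_records = [
    {"customer_id": 1, "email": "a@old.example", "phone": "555-0100", "updated": 1},
    {"customer_id": 1, "email": "a@new.example", "phone": "", "updated": 2},
    {"customer_id": 2, "email": "b@example.com", "phone": None, "updated": 1},
]
golden = build_golden_records(source_records)
print(golden[1]["email"], golden[1]["phone"])  # a@new.example 555-0100
```

Real master data management also involves matching records that do not share a clean key, which this sketch deliberately leaves out.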

Big data prototyping should be done on a public cloud, because it can be scaled instantly. After prototyping, the big data solution is moved to a private cloud. Boundary crashes can be avoided by setting scalability limits well beyond the expected load. Streaming data analytics is implemented only for the cases where it applies. The data world is modelled by dividing data into dimensions and facts. Separate data sets are integrated from separate data sources, and structured and unstructured data are integrated. Name-value pair data sets are stored in NoSQL data sources.
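Dividing data into dimensions and facts can be shown with a small example. The sketch below splits flat rows into dimension lookups with surrogate keys and fact rows that hold only keys and measures. The function name, the column names, and the sample sales rows are assumptions made for illustration.

```python
def to_star_schema(rows, dimension_fields, measure_fields):
    """Split flat rows into dimension tables and a fact table.

    Returns (dimensions, facts) where dimensions maps each dimension
    name to a {value: surrogate_key} lookup, and each fact row holds
    the surrogate keys plus the numeric measures.
    """
    dimensions = {name: {} for name in dimension_fields}
    facts = []
    for row in rows:
        fact = {}
        for name in dimension_fields:
            table = dimensions[name]
            value = row[name]
            if value not in table:
                table[value] = len(table) + 1  # next surrogate key
            fact[name + "_key"] = table[value]
        for name in measure_fields:
            fact[name] = row[name]
        facts.append(fact)
    return dimensions, facts


# Hypothetical flat sales data.
sales = [
    {"product": "laptop", "region": "EU", "units": 2, "revenue": 2400.0},
    {"product": "phone", "region": "EU", "units": 5, "revenue": 3000.0},
    {"product": "laptop", "region": "US", "units": 1, "revenue": 1200.0},
]
dimensions, facts = to_star_schema(
    sales, dimension_fields=["product", "region"], measure_fields=["units", "revenue"]
)
print(dimensions["product"])  # {'laptop': 1, 'phone': 2}
print(facts[2])  # {'product_key': 1, 'region_key': 2, 'units': 1, 'revenue': 1200.0}
```

Keeping measures in the fact rows and descriptive values in the dimensions is what lets the same facts be aggregated by product, by region, or by both.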

Big data governance consists of data quality, metadata management, master data management, privacy, security, and compliance. IT needs to work with management and support cross-organizational cooperation. Private data needs to be secured, while shared data is made available to third parties, vendors, institutions, and other enterprises. Roles within the enterprise are identified for data stewards, sponsors, program drivers, and users.